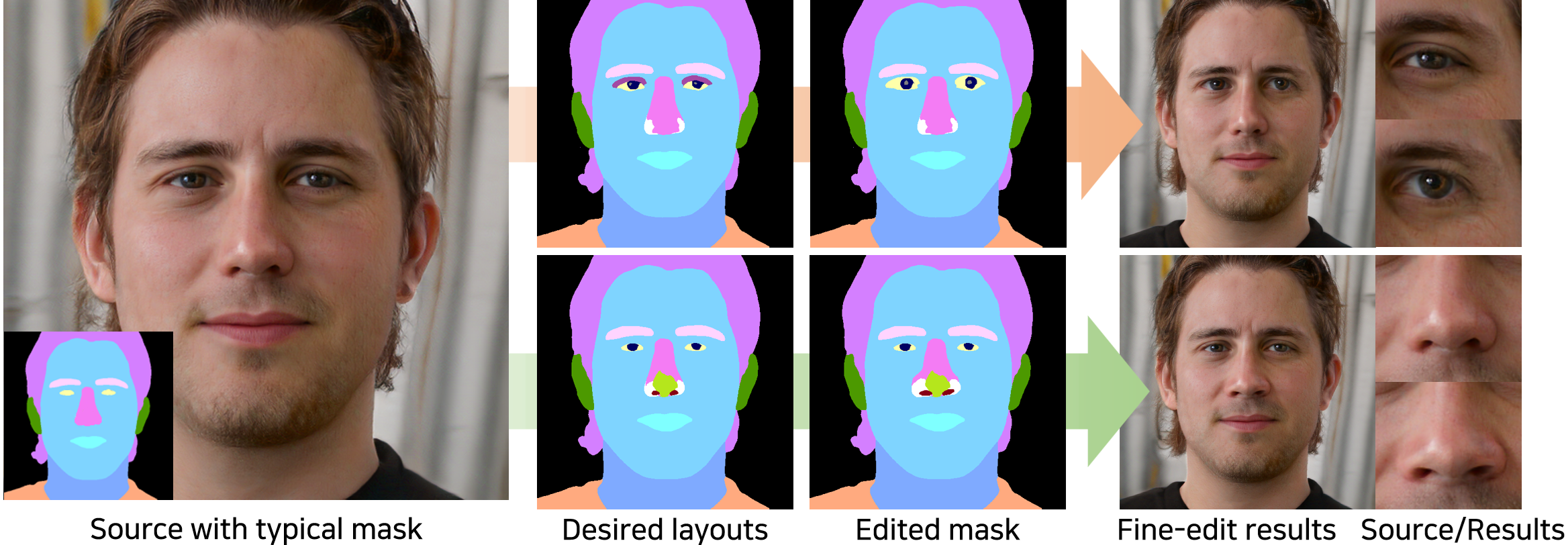

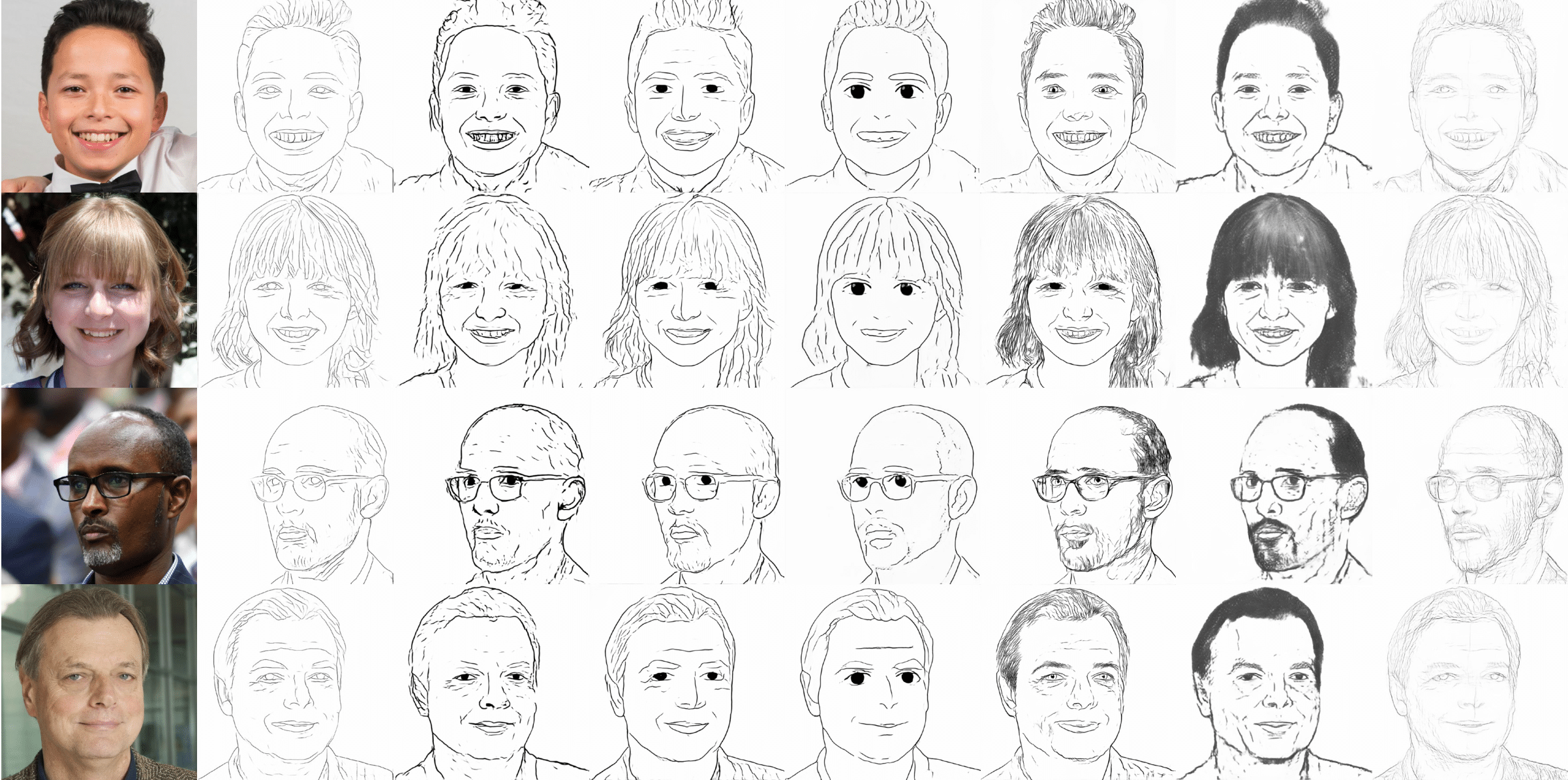

- August 2025: My research on face editing was featured in KAIST News.

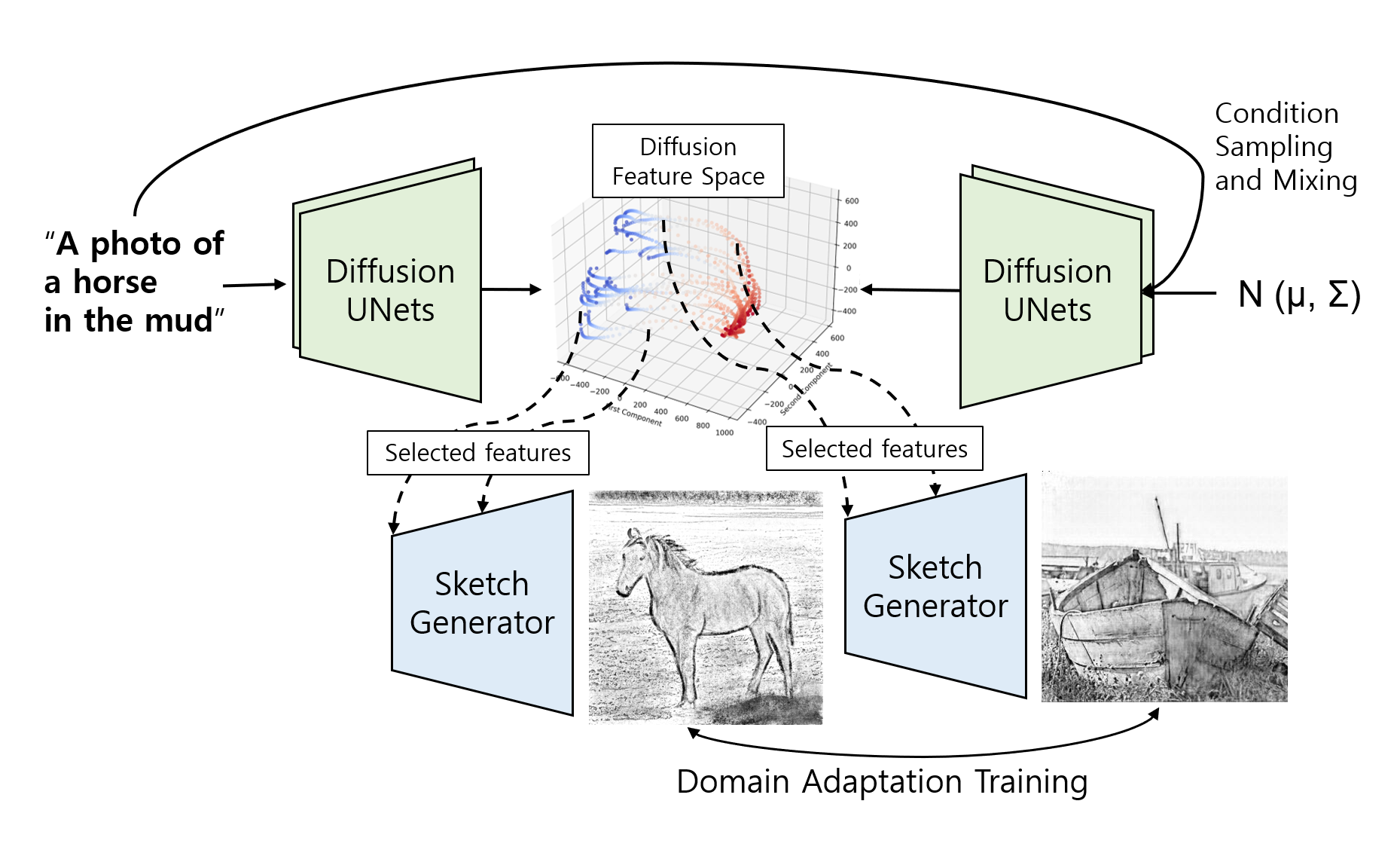

- June 2025: I presented two first-authored papers at CVPR 2025 as the only Korean researcher.

- March 2025: I gave an invited talk on graphics applications of generative models at Konyang University.

- July 2024: I received the Best Master’s Thesis Award from the Korea Computer Graphics Society.

Kwan Yun

I build human-centric generative models and leverage them to animate and edit using synthetic data.